Social interaction is a basic need at any stage of life. Multiple studies support the idea that maintaining close relationships and feeling part of a community helps prevent cognitive decline.

Our social environment becomes more important in advanced age. This is a crucial period in which many people tend to isolate themselves and lose family connections. Cognitive decline can affect memory, attention, processing speed, and even our ability to navigate spaces and make decisions.

The result is a cascade effect where physical changes make social interaction increasingly difficult, leading to isolation that can accelerate mental and emotional decline.

Technology That Helps Us Communicate in Advanced Age

With these challenges in mind, Mauricio Ramírez, a mechatronic engineer and assistant research professor at Tec de Monterrey, and his team saw an opportunity. During the Health Longevity Symposium organized by the School of Engineering and Sciences in collaboration with TecSalud, Ramírez presented projects that could help older adults maintain an enriching social life.

His team has developed a brain-computer interface that can decode imagined words, allowing people to communicate solely through their thoughts.

“The system is designed for people who cannot produce speech,” explains Ramírez. “However, they can imagine and through this control the computer interface.”

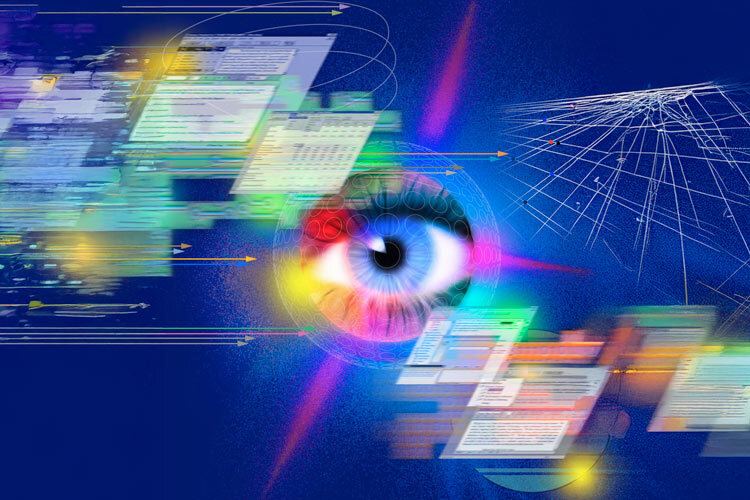

The signals are read with electroencephalography (EEG): electrodes mounted in a flexible cap are placed on the person's scalp, where they record the brain's electrical activity.

For Ramírez's team, the most direct application of this technique is the brain-computer interface they have developed. Such systems establish communication between a person and an external device using only information from the brain.

“The intention is that people can control them at will,” said Ramírez, citing a wheelchair or a prosthesis as examples of such devices.

For people with hearing or speech disabilities, the brain-computer interface can change how they communicate. The system works by having users imagine specific words such as “yes,” “no,” or the numbers “one,” “two,” and “three.” Through a virtual assistant created by Ramírez’s team, users navigate three levels to communicate basic needs.

First, the system asks whether the person wants help. Then it offers three categories: nutrition, health, or entertainment. Finally, users specify their exact need using the three available numbers; for example, that they want to eat, have finished eating, want to watch television, or want to listen to music.
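The three levels described above can be pictured as a small decision tree. The following is only a minimal sketch of that idea, not the team's implementation: it assumes the imagined words have already been decoded into text, that categories are chosen with the same number words as the final needs, and that each category has three options. The names `MENU`, `NUMBER_WORDS`, and `navigate` are hypothetical, and the options marked "hypothetical" are placeholders that do not come from the article.

```python
from typing import Optional, Sequence

# Map each decoded number word to a list position.
NUMBER_WORDS = {"one": 0, "two": 1, "three": 2}

# Level 2 categories and level 3 needs. Entries marked "hypothetical"
# are illustrative placeholders, not options named in the article.
MENU = {
    "nutrition": [
        "want to eat",
        "have finished eating",
        "hypothetical: want to drink",
    ],
    "health": [
        "hypothetical: in pain",
        "hypothetical: need medication",
        "hypothetical: call a caregiver",
    ],
    "entertainment": [
        "want to watch television",
        "want to listen to music",
        "hypothetical: want to read",
    ],
}
CATEGORIES = list(MENU)  # insertion order: nutrition, health, entertainment


def navigate(decoded_words: Sequence[str]) -> Optional[str]:
    """Walk the three levels with already-decoded words.

    Returns the selected need, or None if the person declined help
    or the sequence is incomplete or not understood.
    """
    # Level 1: does the person want help?
    if len(decoded_words) < 1 or decoded_words[0] != "yes":
        return None
    # Levels 2 and 3 both need a decoded number word.
    if len(decoded_words) < 3:
        return None
    category_word, need_word = decoded_words[1], decoded_words[2]
    if category_word not in NUMBER_WORDS or need_word not in NUMBER_WORDS:
        return None
    category = CATEGORIES[NUMBER_WORDS[category_word]]
    need = MENU[category][NUMBER_WORDS[need_word]]
    return f"{category}: {need}"


print(navigate(["yes", "three", "two"]))
```

With only five decodable words, a fixed tree like this keeps every choice within the vocabulary the decoder can recognize, at the cost of offering only discrete options.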

Recognizing that brain signal decoding has limitations, Ramírez’s team has also developed a communication system using eye movements.

“It’s a more flexible communication system in which we not only have discrete options, but can write more freely,” explained Ramírez.

The system includes predictive text features that learn from the user, gradually building a personalized vocabulary. Ramírez admits that the prototype is slow for now, but the possibilities are significant: “Sometimes we perceive it as too slow,” he says. “But for a person who cannot say a single word, this helps.”
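One simple way to picture predictive text that learns from the user is a word-frequency table that grows with everything the person writes. The sketch below is an assumption about how such a feature could work, not a description of the team's prototype; the class `PersonalVocabulary` and its methods are hypothetical names chosen for illustration.

```python
from collections import Counter
from typing import List


class PersonalVocabulary:
    """Frequency-based word suggestions learned from one user's own text."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()

    def learn(self, text: str) -> None:
        # Count every word the user has written (case-insensitive).
        self.counts.update(word.lower() for word in text.split())

    def suggest(self, prefix: str, k: int = 3) -> List[str]:
        # Return up to k known words starting with the prefix,
        # most frequent first, with ties broken alphabetically.
        prefix = prefix.lower()
        matches = [(w, c) for w, c in self.counts.items() if w.startswith(prefix)]
        matches.sort(key=lambda pair: (-pair[1], pair[0]))
        return [word for word, _ in matches[:k]]


vocab = PersonalVocabulary()
vocab.learn("music please")
vocab.learn("more music")
print(vocab.suggest("m"))  # ['music', 'more']
```

Because each accepted suggestion saves several eye-movement selections, even a basic model like this could reduce the number of steps needed per word as the vocabulary grows.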

For now, the students in his laboratory are the ones who have tested the systems and prototypes. As research progresses from laboratory to clinical applications, the focus is shifting toward real-world implementation with older adults who most need these tools.

Ramírez mentioned that the team was beginning to work with the National Institute of Geriatrics and Rehabilitation to ensure that its work reflects the realities of this population. Although the interface can have multiple applications, the team's focus is on improving the social lives and preserving the autonomy of older adults.
