Can empathy be taught to young people in highly vulnerable settings using WhatsApp and artificial intelligence (AI)? That’s precisely what a team of researchers set out to explore in a study that tested the potential of these technologies to foster socioemotional skills through an AI tutor and mobile phone interaction.

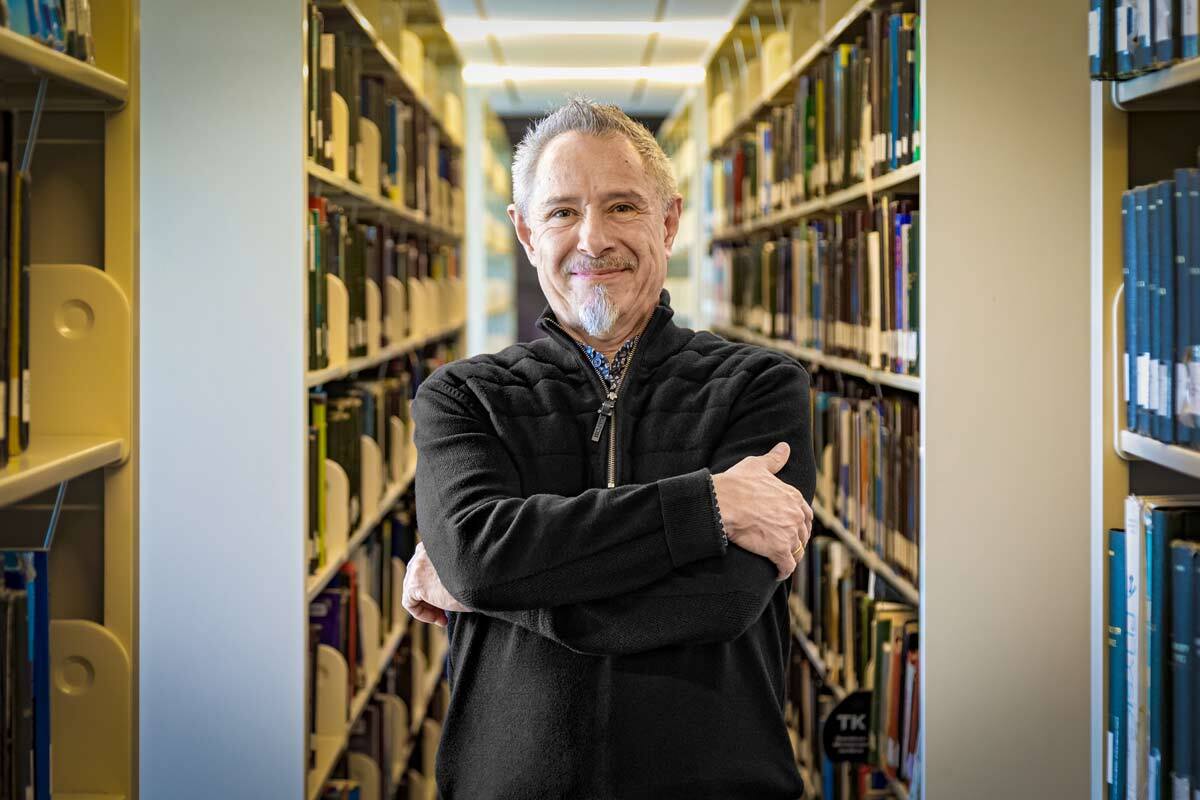

Luis Enrique Portales, Director of Experimentation and Impact Measurement at the Institute for the Future of Education (IFE), explained that the study involved around 450 young participants enrolled in CONALEP schools and community centers across eight locations in Nuevo León, Mexico. Each student was given a smartphone with mobile data and access to WhatsApp to ensure they could interact with the AI tutor.

“We designed an AI agent with a distinct personality, blocks of knowledge, and lessons it was meant to deliver to the students,” Portales said. The project featured three types of intervention: one with high human involvement and one with low human involvement, both of which relied on a human tutor to guide students’ use of the AI, and a third with no human guidance, in which students communicated with the AI directly and independently.

After analyzing data collected by the AI tutor over the course of the six-week pilot, the researchers found evidence of improvement in the students’ socioemotional learning. The project, carried out under the field name El Camino, was led by IFE researchers in collaboration with organizations such as High Resolves Group and Educación para Compartir, and supported by Fundación FEMSA.

AI tutor helps young people develop empathy

Studies suggest that low levels of empathy may be linked to violent behaviors, such as criminal activity, especially when the deficit is in cognitive empathy, the ability to understand what others are feeling. Developing empathy could be crucial for resolving conflicts and for empowering individuals to propose solutions that improve their communities and build a brighter future, says Portales.

“It’s easier to foster empathy in adolescents than in adults—it’s a great age to teach them a whole range of socioemotional skills,” the researcher adds.

The project was a conversational educational intervention: the AI tutor held dialogues with the teenagers to guide their learning through structured knowledge blocks and eight lessons, each focused on a different aspect of empathy. The agent, named Carla, was designed with a youthful personality but a mentoring tone. In these exchanges, Carla asked questions, explained key concepts, and gave students personalized feedback.

In the two models with human involvement, instructors actively promoted the use of the AI. In the high-intervention model, mentors taught all of the content programmed into the AI agent and used the AI tutor as a support tool to reinforce lessons, essentially as a teaching assistant. The low-intervention model assumed that students would first review the concepts with the AI tutor and then bring that knowledge to class to spark new discussions. For example, they might walk into the classroom and be asked, “What did you learn with Carla today?”

There was also a model with no human intervention, where students were given a device preloaded with the AI’s WhatsApp contact and instructed to talk to the assistant about topics they found meaningful. In this setup, there were no associated classroom sessions, and interaction with the AI was entirely self-directed. At the end of the process, researchers conducted interviews with the participants.

The researchers found that the largest gains in empathy learning occurred in the high- and low-intervention models. More broadly, the experiment shed light on how AI can be adopted, and what impact it can have, under different approaches to building this skill.

Building a strategy to measure empathy

The project assessed three types of empathy: emotional empathy, the ability to recognize and connect with other people’s emotions; cognitive empathy, the skill of understanding what someone else is thinking or feeling; and behavioral empathy, which involves acting and responding based on what one understands and feels from another person—that is, how empathy translates into real-world actions within a community.

Building on these three dimensions, the researchers used machine learning and natural language processing techniques to train an algorithm that analyzed roughly 18,000 conversations between the participants and the AI tutor. Participants also completed weekly assessments to track their progress over time.

“We created an entire evaluation rubric—what it looks like when someone speaks about emotional, cognitive, or behavioral empathy at different levels,” Portales explains. Using this rubric, the team categorized conversations by empathy type and then rated them on a development scale from one to ten.

This strategy allowed the team to conduct both descriptive and comparative analyses, across the different intervention models and across settings (CONALEP schools versus community centers). For instance, the impact was greater in community centers, likely owing to those students’ intrinsic motivation to learn.
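To make the comparative step concrete, here is a minimal Python sketch, assuming each conversation has already been classified by empathy type and rated on the one-to-ten rubric scale. The record layout (`RatedConversation`), its field names, the group labels, and the sample records are hypothetical illustrations, not the IFE team’s data model or code; the sketch only shows how rated conversations could be averaged by intervention model or by setting.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

# The three empathy dimensions assessed in the study.
EMPATHY_TYPES = ("emotional", "cognitive", "behavioral")


@dataclass(frozen=True)
class RatedConversation:
    """One conversation after rubric scoring (hypothetical record layout)."""
    participant_id: str
    model: str         # intervention model, e.g. "high", "low", "none"
    context: str       # setting, e.g. "school" or "community_center"
    empathy_type: str  # one of EMPATHY_TYPES
    score: int         # rubric development level, 1 to 10

    def __post_init__(self) -> None:
        # Reject records outside the rubric's categories or its 1-10 scale.
        if self.empathy_type not in EMPATHY_TYPES:
            raise ValueError(f"unknown empathy type: {self.empathy_type}")
        if not 1 <= self.score <= 10:
            raise ValueError(f"score out of range: {self.score}")


def mean_score_by(conversations, key):
    """Average rubric score per group, where key(conversation) names the group."""
    groups = defaultdict(list)
    for conv in conversations:
        groups[key(conv)].append(conv.score)
    return {group: mean(scores) for group, scores in groups.items()}


# Illustrative records only; these are not data from the study.
sample = [
    RatedConversation("p1", "high", "school", "cognitive", 6),
    RatedConversation("p2", "none", "community_center", "emotional", 5),
    RatedConversation("p3", "high", "community_center", "behavioral", 7),
]
by_context = mean_score_by(sample, lambda c: c.context)
by_model = mean_score_by(sample, lambda c: c.model)
print(by_context)
print(by_model)
```

Passing a different `key` function switches the comparison (by setting, by model, or by empathy type) without changing the aggregation logic. A real analysis would also need to account for how many conversations each participant contributed.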

In total, 776 devices were distributed; 444 of them were activated, and 398 showed activity involving empathy-related interactions. Scores rose for all three types of empathy. The average gain was modest, from 5.8 to 5.9, but the researchers considered it meaningful given the short duration of the intervention, the fact that the change could be measured, and the insights it offered into the differences between models.
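The figures in this paragraph can be checked with a few lines of arithmetic. The Python sketch below uses only the counts and averages reported above to compute the share of devices that were activated and used, and the size of the average gain in absolute and relative terms. Because 5.8 and 5.9 are rounded to one decimal place, the computed gain of 0.1 points is itself approximate.

```python
def percent(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole


# Device counts reported for the pilot.
distributed, activated, active = 776, 444, 398

# Average rubric scores (1 to 10 scale) as reported, rounded to one decimal.
before, after = 5.8, 5.9

activation_rate = percent(activated, distributed)  # activated / distributed
usage_rate = percent(active, activated)            # active / activated
overall_rate = percent(active, distributed)        # active / distributed
gain = after - before                              # absolute gain in points
relative_gain = percent(gain, before)              # gain relative to baseline

print(f"activated: {activation_rate:.1f}% of distributed devices")
print(f"active:    {usage_rate:.1f}% of activated devices")
print(f"active:    {overall_rate:.1f}% of distributed devices")
print(f"gain:      {gain:.1f} points ({relative_gain:.1f}% relative)")
```

For these inputs the script reports 57.2% of devices activated, 89.6% of activated devices in use, 51.3% of all distributed devices in use, and a relative gain of about 1.7%.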

AI agent leaves students with a positive overall experience

Students found chatting and learning with the AI tutor comfortable, and acceptance was high: 84.4%, according to surveys administered by the AI assistant itself. Besides learning about the different types of empathy, students appreciated being able to talk about a wide range of topics whenever they wanted, without fear of judgment. In some cases, these conversations turned to emotional or deeply personal subjects, which helped increase engagement.

Given the personal nature of these exchanges, several measures were put in place to ensure privacy and data protection. All analyzed conversations were anonymized, and informed consent was obtained, including confidentiality agreements signed by the students’ parents. As a safety precaution, the AI tutor was trained not to engage with sensitive topics and instead to direct users to a responsible adult. “Whenever such conversations occurred, it would say: go talk to a teacher, family member, or school counselor.”
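The article does not describe how the redirect was implemented. As a purely illustrative sketch, assuming a simple keyword screen placed in front of the tutor (a hypothetical design, and a far cruder one than a production safety system would require), the behavior could look like this in Python. The pattern list, `REDIRECT_MESSAGE`, and the `tutor_reply` callback are all invented for the example.

```python
import re
from typing import Callable

# Hypothetical patterns for sensitive topics. A real deployment would need a
# professionally reviewed policy, not a short keyword list.
SENSITIVE_PATTERNS = [
    re.compile(r"\bself[- ]?harm\b", re.IGNORECASE),
    re.compile(r"\bsuicid\w*", re.IGNORECASE),
    re.compile(r"\babus\w*", re.IGNORECASE),
]

# Hypothetical wording modeled on the redirect quoted in the article.
REDIRECT_MESSAGE = "Please go talk to a teacher, family member, or school counselor."


def is_sensitive(message: str) -> bool:
    """True if any sensitive-topic pattern appears in the message."""
    return any(pattern.search(message) for pattern in SENSITIVE_PATTERNS)


def respond(message: str, tutor_reply: Callable[[str], str]) -> str:
    """Redirect sensitive messages to an adult; otherwise let the tutor answer."""
    if is_sensitive(message):
        return REDIRECT_MESSAGE
    return tutor_reply(message)


# Usage with a stand-in tutor that returns a canned reply.
print(respond("What is cognitive empathy?", lambda m: "Let's look at lesson 2."))
print(respond("I keep thinking about self-harm", lambda m: "unused"))
```

Keyword matching both misses paraphrases and flags harmless mentions of the same words, so this sketch illustrates only the redirect behavior, not a robust way to detect sensitive topics.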

Instructors’ experiences with the AI varied. Some felt that it undermined their authority or competed with them in the classroom. Others embraced it as an educational tool, using it to support their teaching, spark ideas for projects, and help answer students’ questions.

As part of this pilot program, the young people involved in the models with human intervention designed and carried out community projects where they applied what they had learned about empathy. In total, 65 projects were created, each aligned with the three dimensions of empathy, with the AI tutor acting as a creative advisor throughout the process.

Portales said the next step for the AI assistant, now called EMI (a nod to the Experimentation and Impact Measurement division at Tec), will be to test it in three new application areas, each with a different audience, objective, and personality. For instance, EMI could serve as a digital mentor for instructors, support youth employability efforts, or promote emotional well-being practices.

“These tutors show us how to speed up teaching and learning processes, and also how to identify social patterns more accurately,” he says. “EMI can support educators, shift its tone, and adapt to different contexts.”
