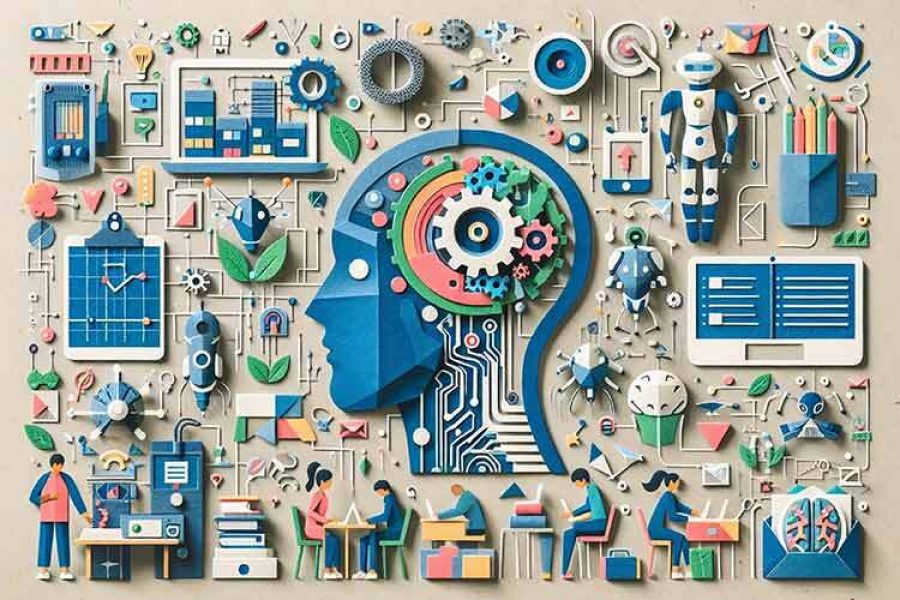

Many people believe that the use of Artificial Intelligence will bring fraud, misinformation, demotivated students and even a dystopian future. To avoid such scenarios, it is important to promote the correct use of AI, but also to educate and empower all of society (not only privileged groups) so that this tool brings well-being to humanity.

During the IFE Conference 2025, held by the Institute for the Future of Education (IFE), three professors and specialists in AI for education shared their reflections on the benefits of using this tool, as well as on the threats and limitations to consider when using it.

The panel Gearing AI4Good: Education, Professional Practice, and Ethics was composed of Yoemy Waller, CEO of Healthcare IT, a company that specializes in AI for health; Vivian Kretzschmar, a doctoral researcher at Louisiana State University, where she works on AI for well-being; and Claudia Camacho, a research professor at the IFE.

Taking the Risk (and Losing the Fear)

To open the debate, Claudia Camacho mentioned science fiction films that have generated collective fear, such as The Matrix or Terminator, in which humanity is overpowered by a destructive Artificial Intelligence, or Wall-E, in which people become couch potatoes who get no physical activity and spend their whole lives in front of a screen.

In this regard, Vivian Kretzschmar recalled that humanity has always feared new technologies, and that this fear has not prevented us from benefiting from them. “Look at the last century: radio, people were afraid of it; cinema, people were afraid; mobile phones, fear; social networks, super fear. But they are still very useful, right?”

“We only need an adjustment and, above all, guidance. We need to be literate in how to use AI correctly, because if we don’t use it, we will be left behind. There is no way to control it. I would say it is a freedom of choice, and we must be smart about it. We must know that we are taking a risk, but we must accept it; there is no way to avoid it, as with all technologies in life.”

Restrictive or Permissive Approach?

In the last three years, AI has burst in like an unstoppable whirlwind, transforming many processes and tasks. Concern about its use is palpable, so much so that some early reactions were outright bans. Claudia Camacho mentioned the case of Italy, which banned ChatGPT but lifted the block the following month because it was impossible to enforce.

Speaking about the risks in healthcare, Yoemy Waller argued that none of these technologies should be banned or avoided, because the benefits generally far outweigh the risks.

“I prefer to have a doctor who uses technology and AI, who learns, understands and tries to discover my condition to make a diagnosis and solve things, than a doctor who is looking at a book from the 1950s, who may not have the latest knowledge. Who will be better prepared to help me be healthy?” she asked.

“We have to embrace AI in all areas and allow people to experiment, understand how it works and discover things, instead of simply banning it, because in the end we would be harming students and humanity; the only way we can prosper is by moving forward with technology,” she said.

For Vivian Kretzschmar, there must be clear distinctions in how AI is implemented and used in classrooms.

“We can use it in a permissive, moderate or very restrictive way. A permissive approach would be to use it freely to generate ideas and to create and correct content, which has its risks and benefits. But we must opt for a moderate approach: allow students to use AI as a reference point to generate ideas, while the conclusions of a piece of work, and how they arrive at them, remain their own original work, the product of their understanding.”

She gave a personal example: as a doctoral student, she had to read a book a week for an entire semester, plus the secondary literature. The books ran up to 400 pages and were in her second language.

“Naturally, I am much slower when reading in another language, but AI helped me a lot to understand those books. For example, one of them was the autobiography of Benjamin Franklin. How could I understand Benjamin Franklin’s language, which is 18th-century English? Probably not on a first reading.”

See the Positive Side and Close Gaps

Is there an inherent risk that AI will produce disengaged students? The panelists’ answer was yes; there is even a risk of losing the capacity for critical thinking. However, if used responsibly, AI can do a lot of good, which is why it is necessary to focus on its positive aspects.

For Vivian Kretzschmar, AI makes some tasks much faster and more efficient, freeing people to focus on the topics that really matter. It can also improve day-to-day work, making academics, researchers and students more productive while giving them equitable access to educational opportunities.

“If we use AI in a positive way, it can be a tool that provides equal access to education for all people, regardless of background, socioeconomic level, culture, gender, or migratory origin. I consider AI a significant step toward closing that gap. It no longer matters where we come from; what matters is where we are going.”

A Trial-and-Error Tool

AI is a powerful tool, capable of transforming the world in unimaginable ways. But like any tool, its use depends on the will and vision of the person who handles it.

Yoemy Waller noted that every new technology goes through a process of trial and error, and that “sometimes you have to take two steps back to take one forward. That’s how it works. We shouldn’t be afraid of making mistakes. All of us users are discovering these technologies just as much as the people who develop them.”

“Our purpose as educators and leaders is to define how we want to use it. Will it be an instrument to empower all of society, to bring well-being to every corner of the planet? Or will it become a privilege of a few, exacerbating inequalities and injustice?”

“As educators, we can decide, or at least propose, how AI can be used and applied to empower all of society, not only a certain group of people who are already well-off, so that it truly empowers us and brings well-being to everyone. We can make artificial intelligence a force for good, a catalyst for progress and well-being for all humanity,” she added.

Along these lines, Claudia Camacho noted that the IFE has studied how best to minimize the risks and maximize the benefits of implementing AI in universities, particularly in developing countries.

There Is Much to Do

For the three panelists, there is still much work to be done, especially in ethics, which is fundamental to the development of AI. They therefore urged that norms protecting the privacy of personal information continue to be created and implemented.

There is also the principle of doing no harm. “For example, in the field of health, no technology should be developed that harms patients or creates a greater risk than they already face,” Waller said.

Other ethical issues include avoiding the information biases present in some models and databases. For Waller, this is especially important in healthcare in the US, where data comes mostly from white Americans: although there are many Latinos, their data is not collected, so they are not represented. As a result, those models may be biased, and developers should make sure they are acting ethically and taking the diversity of people into account.

“Ethics is something that AI must embrace and that we must promote, because regulations are not enough; we must foster in every citizen an awareness of the benefits and risks of AI. But in the end, all this will be possible only if we collaborate. I believe teamwork is the right path for AI, and it is the path toward the good of all humanity,” Camacho concluded.

Did you find this story interesting? Would you like to publish it? Contact our content editor to learn more at marianaleonm@tec.mx