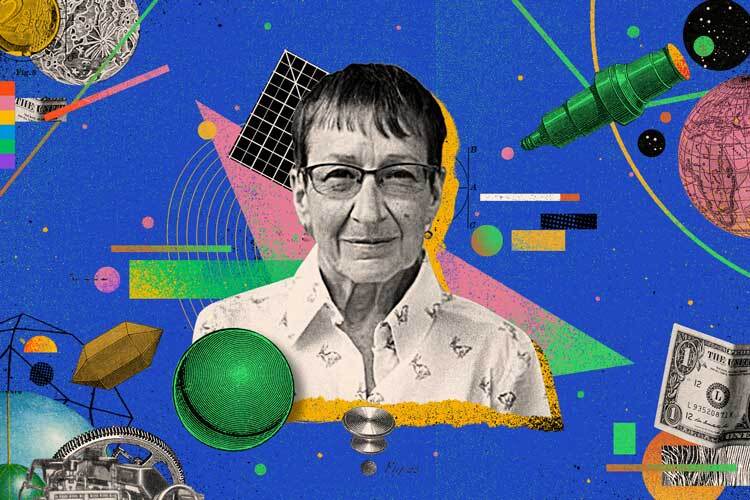

Ivanna Martínez, a Tec de Monterrey graduate, worked with the Fair Network, which encourages research on technology and feminist artificial intelligence, on a study evaluating menstrual monitoring apps for gender biases.

These gender biases replicate, through technology, social constructs of inequality such as racism or sexism, according to Paola Ricaurte, a professor and associate researcher at the Department of Media and Digital Culture in the School of Humanities and Education at Tec de Monterrey.

“Technology could perpetuate inequality in societies that have racism, classism, sexism, and other types of discrimination. They serve as a sort of a social measure for how we relate to ourselves, to others, and the environment,” claims Ricaurte.

Ivanna’s team, with Ricaurte’s assistance, found several kinds of bias in these menstruation monitoring apps. The apps have recently grown in popularity within the health-tracking app sector, which had more than 6 billion users globally by 2020.

The findings, which will be published later this year, were presented at Tec de Monterrey’s Tec Science Summit research conference.

Biases in Apps

Martínez’s research team identified four major areas of bias in monitoring apps such as Clue, Flo, and Period Tracker.

The first area is the reinforcement of prejudices and assumptions in the design of menstruation applications, including the use of colors, pictures, or symbols like hearts and flowers that are typically associated with femininity.

Ivanna says that among the people surveyed, those who used these applications believed that a more varied and inclusive design was necessary.

Because these apps are predominantly made for cisgender, heterosexual women, they exclude people who identify with other genders, which introduces another bias in terms of function and gender portrayal.

Paola additionally points out that, since different bodies menstruate in distinct ways, these applications’ algorithms are less precise: they were developed primarily for people with regular menstrual cycles and are consequently ill-suited to non-binary people or those with irregular cycles.

“The apps are biased by design, not just because they seek to encourage reproductive function but also because they support stigmas associated with menstruation by providing tips on how to hide it or preserve your physical appearance,” says Martínez.

The research also addressed concerns about app users’ data privacy. Ivanna points out that users keep using these apps despite their unease about losing control of their information, because there aren’t any alternatives.

The Importance of Combating Biases in Technology

According to Paola, biases in technology mirror real-world conditions and may arise not just in a technology’s output but also while new technologies, such as artificial intelligence, are being developed.

“We must strive for more diverse teams, both in terms of gender and life experiences. Including people from various cultural origins and languages leads to less prejudice based on race, social status, and other factors,” says Ricaurte.

Involving a community in creating technology is one of the strategies the professor emphasized, particularly when the resulting technology is aimed at that same community.

For menstruation apps, for example, the ideal strategy would be to give more people the opportunity to take part in their design, development, and release.

“I find it interesting that even if they don’t fully understand what the problem is, the users of these apps are aware that something isn’t operating correctly. For us, it was crucial to demonstrate that the gender construction used to develop these applications is characterized by these social and cultural prejudices and does not correspond to the real world,” continues Ricaurte.

Education is another key component in raising awareness in this area. Paola underlines it as crucial for recognizing and fighting prejudice and any sort of discrimination, both offline and in digital environments.

“The more people who understand how technology works, the more chance we have to create fairer and more respectful things for everyone,” concludes Ricaurte.